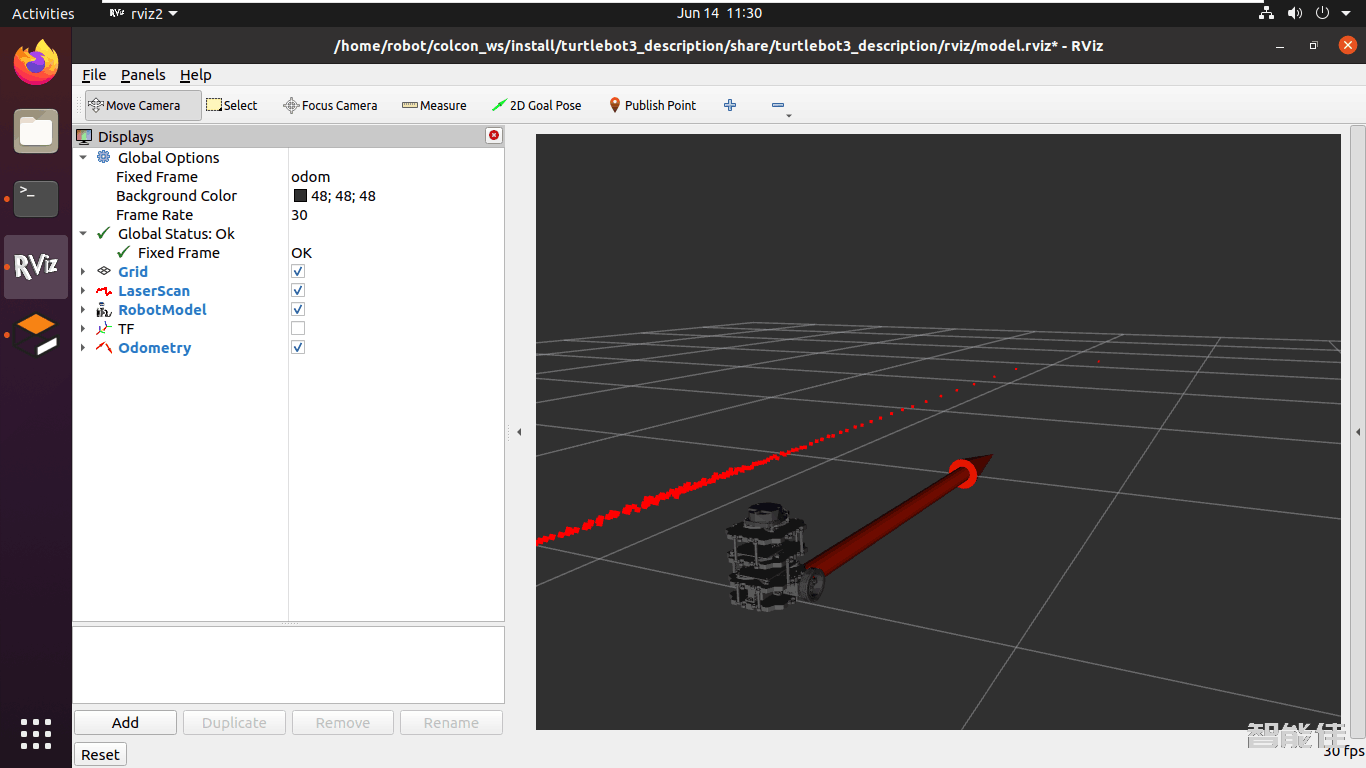

TurtleBot3 ROS2 Foxy Tutorial 7: Displaying the Model in Rviz2

Launch Gazebo:

ros2 launch turtlebot3_gazebo turtlebot3_house.launch.py
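
The TurtleBot3 Gazebo launch files read the robot model from the `TURTLEBOT3_MODEL` environment variable, so the command above may fail if that variable is not set in the same terminal. A minimal sketch, assuming the `burger` model (this tutorial does not say which model is used; `waffle` and `waffle_pi` are the other stock options):

```shell
# Assumption: the burger model is wanted; substitute waffle or waffle_pi as needed.
export TURTLEBOT3_MODEL=burger

# Confirm the value that the launch file will see.
echo "TURTLEBOT3_MODEL=${TURTLEBOT3_MODEL}"
```

The export only applies to the current shell session, so it needs to be repeated in each new terminal or added to `~/.bashrc`.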

In a second terminal, launch Rviz2:

ros2 launch turtlebot3_bringup rviz2.launch.py

The result looks like this:

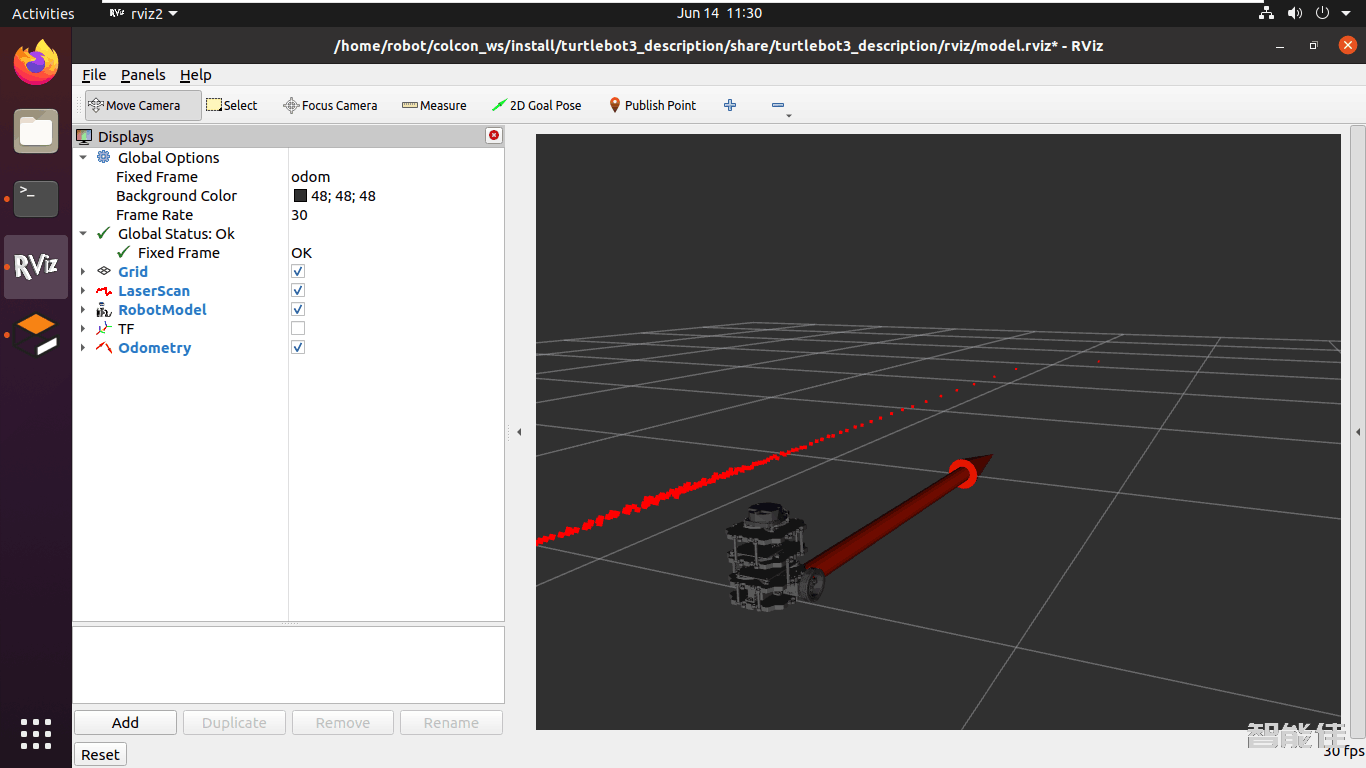
